We use artificial neural networks (ANNs) for the automated analysis of geometric measurement data. We train each network individually for its use case with our own training data, drawing on a large pool of 2D and 3D data to create training and test sets. This allows us to tailor object classes and data sets to individual tasks, which produces better results than training on publicly available data sets.

Good training data – better data analysis

Artificial neural networks can only be as good as the data they were trained with. That’s why we’ve developed structures and best practices to make the most of our data. Preparing a network or model for training consists of four steps:

- Class definition

- Data selection

- Annotation

- Evaluation

The specific data analysis needs of our clients or research partners determine how we design the training process. We start by creating a list of the object classes that are relevant for the required data analysis. This class list defines which data is relevant and how it is annotated. Our team defines the classes in workshops with our clients to arrive at the most efficient result.

Careful data selection and annotation down to the pixel/point: data quality determines the reliability of the model

The next step is to select the required data. The end-use application dictates the requirements for detection robustness. For example, a system intended for outdoor, year-round use requires training data from the same or similar sensors, covering the environmental conditions of the entire year. The minimum number of examples required for the rarest class determines the total size of the data set, because both the required variance and the natural distribution of the classes have to be taken into account.
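The sizing argument can be made concrete with a little arithmetic. The following is only a minimal sketch under stated assumptions: the class names, the pilot counts and the target of 500 examples are hypothetical, and `required_dataset_size` is an illustrative helper, not part of any described tool chain. It estimates how many samples have to be collected so that the rarest class is expected to reach a given minimum, assuming the classes keep the distribution observed in a small pilot sample.

```python
def required_dataset_size(pilot_counts, min_examples_rarest):
    """Estimate the total number of samples to collect.

    pilot_counts: class name -> number of examples seen in a pilot sample
                  (hypothetical values, assumed to reflect the natural
                  class distribution).
    min_examples_rarest: minimum number of examples wanted for the
                  rarest class (an assumed project requirement).
    """
    if not pilot_counts or min(pilot_counts.values()) <= 0:
        raise ValueError("every class needs at least one pilot example")

    pilot_total = sum(pilot_counts.values())
    rarest_class = min(pilot_counts, key=pilot_counts.get)
    rarest_count = pilot_counts[rarest_class]

    # Integer ceiling of min_examples_rarest * pilot_total / rarest_count,
    # so the estimate is never rounded down.
    numerator = min_examples_rarest * pilot_total
    total = (numerator + rarest_count - 1) // rarest_count
    return rarest_class, total


# Hypothetical pilot sample of 1,000 annotated objects.
pilot = {"road": 700, "vegetation": 250, "traffic_sign": 50}
rarest, total = required_dataset_size(pilot, min_examples_rarest=500)
print(rarest, total)
```

This is an expected-value estimate only. It does not model the variance requirement mentioned above (for example seasonal conditions), which in practice may push the required data set size higher.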

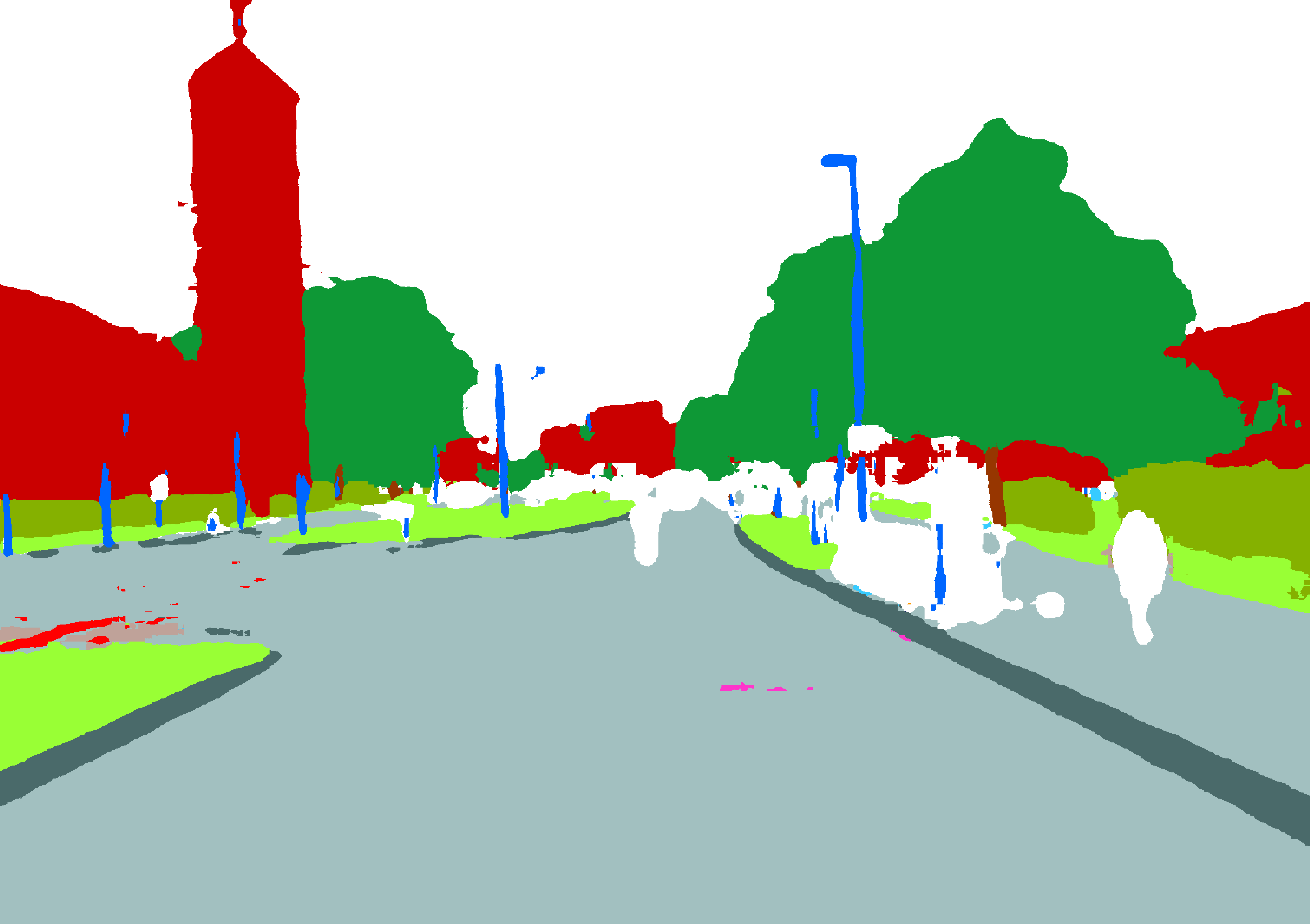

The selected data is then manually annotated, i.e. semantically labeled. The result is a “picture book” that can be used to train, or teach, the network. This time-consuming process is still state of the art, because the quality of the training data determines the quality of the model’s results. Objects that are visible in images or laser scanner point clouds are selected down to the pixel or point according to the annotation class list and assigned to an object class and, where applicable, to an instance of that class. We apply tested best practices for quality control and to keep the workflow efficient.
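One way to picture such an annotation is as one label per pixel or point, holding a class ID and an optional instance ID. The sketch below is an illustration only: `CLASS_LIST`, `Label` and `check_annotation` are hypothetical names, and the quality check shown (flagging labels that are not in the class list) is just one plausible example of a quality-control step, not a description of the actual workflow.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Hypothetical annotation class list agreed on for a project.
CLASS_LIST = {0: "unlabeled", 1: "road", 2: "traffic_sign"}


@dataclass(frozen=True)
class Label:
    """Annotation of a single pixel or point."""
    class_id: int
    instance_id: Optional[int] = None  # only set for countable objects


def check_annotation(labels, class_list):
    """Basic quality check for one annotated image or point cloud."""
    # Indices of pixels/points whose class is not in the class list.
    unknown = [i for i, lab in enumerate(labels)
               if lab.class_id not in class_list]
    # Number of pixels/points per known class.
    per_class = Counter(class_list[lab.class_id] for lab in labels
                        if lab.class_id in class_list)
    # Distinct object instances, identified by (class, instance) pairs.
    instances = {(lab.class_id, lab.instance_id) for lab in labels
                 if lab.instance_id is not None}
    return unknown, per_class, len(instances)


# Six points: two on the road, three on two different signs, one bad label.
point_labels = [Label(1), Label(1), Label(2, 1), Label(2, 1),
                Label(2, 2), Label(7)]
unknown, per_class, n_instances = check_annotation(point_labels, CLASS_LIST)
print(unknown, dict(per_class), n_instances)
```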

Some of the data obtained through this approach is used as ground truth for evaluating our networks. This test data is used to measure the detection accuracy for each class and, where possible, to identify parameters that can be adjusted to improve the result.
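A per-class comparison against the ground truth could look like the sketch below. The text does not name a specific metric; intersection over union (IoU) is used here as an assumption because it is a common choice for pixel-wise or point-wise labels. The function name and the sample labels are hypothetical.

```python
def per_class_iou(ground_truth, prediction, class_ids):
    """Compute intersection over union for each class.

    ground_truth, prediction: equally long sequences of class IDs,
    one entry per pixel or point.
    Returns class ID -> IoU, or None if the class occurs in neither.
    """
    if len(ground_truth) != len(prediction):
        raise ValueError("ground truth and prediction differ in length")

    scores = {}
    for class_id in class_ids:
        pairs = list(zip(ground_truth, prediction))
        # Labeled as this class in both ground truth and prediction.
        intersection = sum(1 for g, p in pairs
                           if g == class_id and p == class_id)
        # Labeled as this class in at least one of the two.
        union = sum(1 for g, p in pairs
                    if g == class_id or p == class_id)
        scores[class_id] = intersection / union if union else None
    return scores


# Hypothetical labels for six points (0 = unlabeled, 1 = road, 2 = sign).
gt = [1, 1, 1, 2, 2, 0]
pred = [1, 1, 2, 2, 2, 0]
scores = per_class_iou(gt, pred, class_ids=[0, 1, 2])
print(scores)
```

Classes with a noticeably lower score than the rest indicate where additional training data or adjusted parameters are most likely to help.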

In addition to this proven method, we’re working on other ways to increase efficiency, such as synthetic training data, automated pre-annotation, combinations of networks and heuristics, and generic data sets that can serve as a basis for specific projects with only minor modifications.